Overconfidence has long been acknowledged as a serious problem in judgment and decision-making. Overconfidence, also known as confidence bias, can even foster delusional thinking, which is common in people with schizophrenia.

“Overconfidence occurs when individuals subjectively assess their aptitude to be higher than their objective accuracy [and] has long been recognized as a critical problem in judgment and decision making,”

said Dr. Cristina Mendonça, one of the lead authors of a new study on the phenomenon.

Previous research has shown that miscalibrated internal representations of accuracy can have serious consequences. Measuring these miscalibrations is far from simple, however, and the causes of overconfidence remain debated.

Overconfidence Ratio Metric

According to evolutionary psychology models, overconfidence maximizes individual fitness, and populations tend to become overconfident as long as the benefits from contested resources are sufficiently large in comparison to the cost of competition. Confidence can be a self-fulfilling prophecy, as those lacking it may fail because they lack it, and those with it may succeed because they have it rather than because of innate ability or skill.

Overconfidence, however, may be especially significant in the area of scientific knowledge, as a lack of awareness of one’s own ignorance can influence behaviours, pose risks to public policies, and even jeopardize health.

For the current study, researchers analyzed four large surveys conducted in Europe and the United States over a 30-year period in order to construct a novel confidence metric that would be indirect, independent across scales, and relevant to a wide range of scenarios.

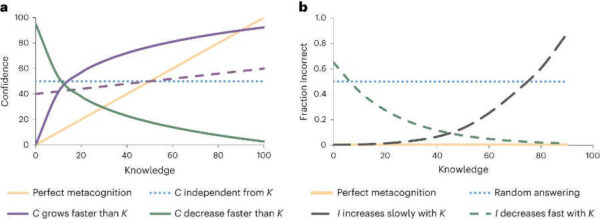

The research team used surveys whose questions offered the answers “True,” “False,” and “Don’t Know,” and devised an overconfidence metric based on the ratio of incorrect to “Don’t Know” answers. The reasoning is that an incorrect answer marks a case in which the respondent thought they knew the answer but was mistaken, which demonstrates overconfidence.

“This metric has the advantages of being easy to replicate and not requiring individuals to compare themselves to others nor to explicitly state how confident they are,”

Dr. Mendonça said.
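
As a rough illustration of how such a metric could be computed for a single respondent, the Python sketch below counts incorrect and “Don’t Know” answers against an answer key. It assumes the ratio is normalized as incorrect / (incorrect + “Don’t Know”) so that it stays between 0 and 1; the exact formula used in the paper may differ. The function names, the answer labels, and the sample responses are all hypothetical.

```python
from typing import Optional, Sequence

# Hypothetical label for the "Don't Know" option; real surveys may code it differently.
DONT_KNOW = "Don't Know"


def overconfidence_ratio(answers: Sequence[str], key: Sequence[str]) -> Optional[float]:
    """Share of non-correct answers that were wrong rather than "Don't Know".

    Assumption: normalized as incorrect / (incorrect + don't know).
    Returns None when the respondent answered every question correctly,
    because the ratio is undefined in that case.
    """
    if len(answers) != len(key):
        raise ValueError("answers and key must have the same length")

    incorrect = 0
    dont_know = 0
    for given, truth in zip(answers, key):
        if given == DONT_KNOW:
            dont_know += 1
        elif given != truth:
            incorrect += 1

    denominator = incorrect + dont_know
    if denominator == 0:
        return None
    return incorrect / denominator


def knowledge_score(answers: Sequence[str], key: Sequence[str]) -> float:
    """Fraction of questions answered correctly."""
    correct = sum(given == truth for given, truth in zip(answers, key))
    return correct / len(key)


# Made-up example: five true/false science questions.
key = ["True", "False", "True", "True", "False"]
answers = ["True", "True", DONT_KNOW, "False", "False"]

ratio = overconfidence_ratio(answers, key)   # 2 incorrect, 1 "Don't Know"
score = knowledge_score(answers, key)        # 2 of 5 correct
```

In this made-up example the respondent gives two wrong answers and one “Don’t Know,” so two thirds of the questions they did not get right were answered with misplaced confidence. Because the calculation needs only the answer sheet, it matches the point made in the quote above: respondents never have to rate their own confidence or compare themselves to others.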

Negative Attitudes Towards Science

The analysis yielded two main findings. First, overconfidence grew faster than knowledge, peaking at intermediate levels of knowledge. Second, those with intermediate knowledge and high confidence had the least favourable attitudes toward science.

“This combination of overconfidence and negative attitudes towards science is dangerous, as it can lead to the dissemination of false information and conspiracy theories, in both cases with great confidence,”

André Mata, one of the authors, said.

To validate their findings, the researchers created a new survey, quantitatively analyzed the work of other colleagues, and used two direct, non-comparative confidence metrics, which confirmed the trend that confidence grows faster than knowledge.

Science Communication Impacts

These findings have far-reaching implications and call into question commonly held beliefs about science communication strategies.

Science communication and outreach frequently focus on simplifying scientific information for a wider audience. While simplified material may provide a minimum level of understanding, it may also widen the confidence gap among those with some limited knowledge.

“There is a common sense idea that ‘a little knowledge is a dangerous thing’ and, at least in the case of scientific knowledge that might very well be the case,”

said study coordinator Dr. Gonçalves-Sá.

Unintended Results

The study overall suggests that trying to spread knowledge without also trying to make people aware of how much there is still to learn can have unintended results.

It also suggests that interventions be directed towards people with intermediate knowledge, since they make up the majority of the population and typically have the least favourable attitudes toward science.

The researchers warn that their confidence metric may not generalize to topics other than scientific knowledge, or to surveys that harshly penalize incorrect replies. The study also detected individual and cultural differences, and it does not establish causation.

In light of potential construct differences, this paper advocates for more research on integrative metrics that can measure knowledge and confidence accurately.

Abstract:

Overconfidence is a prevalent problem and it is particularly consequential in its relation with scientific knowledge: being unaware of one’s own ignorance can affect behaviours and threaten public policies and health. However, it is not clear how confidence varies with knowledge. Here, we examine four large surveys, spanning 30 years in Europe and the United States and propose a new confidence metric. This metric does not rely on self-reporting or peer comparison, operationalizing (over)confidence as the tendency to give incorrect answers rather than ‘don’t know’ responses to questions on scientific facts. We find a nonlinear relationship between knowledge and confidence, with overconfidence (the confidence gap) peaking at intermediate levels of actual scientific knowledge. These high-confidence/intermediate-knowledge groups also display the least positive attitudes towards science. These results differ from current models and, by identifying specific audiences, can help inform science communication strategies.

References:

- Lackner, S., Francisco, F., Mendonça, C. et al. Intermediate levels of scientific knowledge are associated with overconfidence and negative attitudes towards science. Nat Hum Behav 7, 1490–1501 (2023)

- Harvey, N. Confidence in judgment. Trends Cogn Sci 1(2), 78–82 (1997). doi:10.1016/S1364-6613(97)01014-0

- Johnson, D. D. P. & Fowler, J. H. The evolution of overconfidence. Nature 477(7364), 317–320 (2011)