According to a recent study, the brain alters how images on the retina are perceived at the very earliest stage of processing whenever it is in our interest to do so. In other words, we unconsciously see things in a distorted way when our survival, well-being, or other interests are at stake.

Do our senses exist to give us the most accurate possible picture of the world, or do they primarily serve our survival? For a very long time, the first view dominated neuroscience.

Over the past 50 years, however, psychologists such as Amos Tversky and Nobel laureate Daniel Kahneman have shown how selective and often incomplete human perception is. Experiments have documented a long list of cognitive biases. One of the most common is confirmation bias: we process new information in a way that confirms our existing beliefs and expectations.

However, researchers have not been able to fully explain how and when these distortions enter the perceptual process.

Gabor Patch Orientation

A research team led by ETH Zurich Professor Rafael Polania and University of Zurich Professor Todd Hare has now demonstrated through a series of experiments that people perceive the same things differently when the decision context changes.

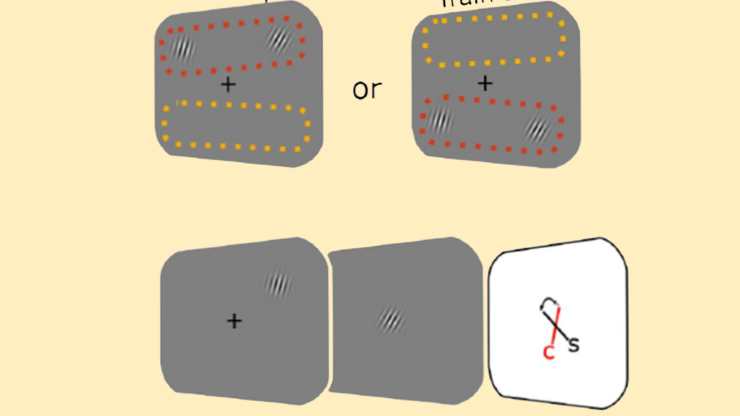

The 86 participants in the study were asked to compare two black-and-white striped patterns known as Gabor patches and say which was closer to a 45-degree angle. The goal was to accumulate as many points as possible.

In the first round, they received 15 points for each correct answer. In the second round, however, the decision context changed: whether an answer was correct or incorrect no longer mattered. Instead, the number of points rose gradually with the orientation of the chosen patch, from 0 degrees up to 45 degrees. In both rounds, the participants saw the same pairs of patches.

Changing Decision Contexts

In principle, the participants should have reached the same judgments both times. When we look at something, our retinas convert the light it reflects into visual information, which is then transmitted to the brain via nerve pathways.

The brain compares this information with prior knowledge and experience and processes it into a three-dimensional image. Because the visual information was identical in both rounds, the resulting perception should have been identical as well.

When the researchers analyzed the results, they found that participants had adjusted their perception in the second round in order to score as many points as possible. Had they perceived the world objectively, there would have been no difference between the two rounds.

Perceptual Representations Adjusted

Participants’ assessments of the angles of the Gabor patches should have been consistent regardless of the decision context. However, this was not the case.

“People flexibly and unconsciously adjust their perceptions when it works to their advantage,” Polania said.

According to Polania and his co-authors, treating cognitive distortions merely as errors that lead us to incorrect or irrational judgments and decisions misses the point.

“Since our cognitive abilities are limited, it actually makes sense that we perceive the world in a distorted or selective way,” he said.

Lost in Perception

Our visual perception appears to be more strongly shaped by the potential usefulness of information than previously thought. In another experiment, the researchers showed that visual information is processed as efficiently as possible from the earliest stages of perception.

“As soon as we look at something, we try to maximize our own benefit. This means that cognitive bias starts long before we consciously think about something,” said Polania.

This is because perception has to cope with a vast amount of information. The brain works more efficiently when it filters, prioritizes, and selects information as early as possible.

Utility Maximization Logic

To determine when visual information becomes distorted, a group of participants repeated the task with the variable scoring rule. Unlike in the first experiment, the pairs of Gabor patches were shown in the upper part of the test field.

After this training round, the actual task began: participants repeatedly saw a single Gabor patch in either the upper or the lower part of the test field and had to estimate the angle of its stripes.

The researchers found that participants judged the same patch differently depending on whether it appeared at the top or the bottom of the test field.

When the patch appeared at the top, participants’ perception immediately followed the utility-maximizing logic they had used during the training round. When it appeared at the bottom, it did not.

AI and Human Perceptual Biases

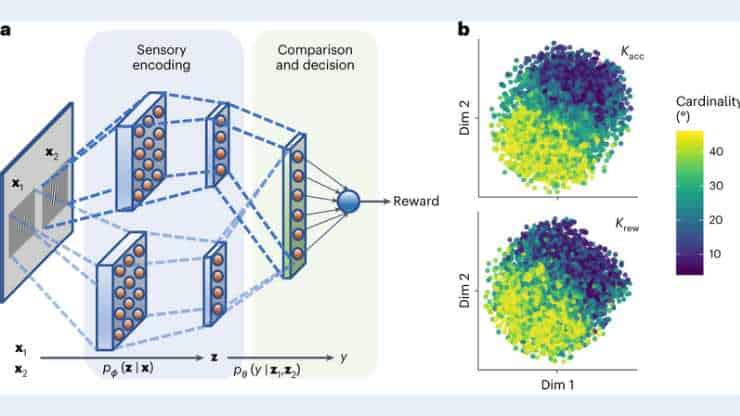

The authors of the study also tested these findings on an artificial intelligence (AI) agent that went through the same experiments as the human subjects.

To achieve the highest possible score, the AI agent likewise stopped trying to represent the world accurately as soon as it began processing the information. It displayed the same perceptual biases as the human participants.

The findings may also shed new light on the debate over biases in humans and AI agents. These distortions may be hard to identify and correct precisely because they are an unconscious part of vision: they take effect before we can even think about what we are seeing.

That our perception is geared toward maximizing utility rather than representing the world accurately makes matters more difficult. Nonetheless, the findings could also open up new ways to identify and correct such biases.

Reference:

- Schaffner, J., Bao, S.D., Tobler, P.N. et al. Sensory perception relies on fitness-maximizing codes. Nat Hum Behav (2023)