Echoic memory is the sensory memory store for auditory information (sounds). After an auditory stimulus is heard, it is held briefly in memory so that it can be processed and interpreted.

A person can usually look at a visual stimulus for as long as they choose and re-examine it several times. Auditory stimuli, by contrast, are usually fleeting and cannot be re-examined. Because a sound is typically heard only once, echoic memories are retained somewhat longer than iconic (visual) memories. Auditory input also arrives sequentially: each sound must be received in turn before the sequence can be processed and interpreted.

Echoic memory can be thought of as a “holding tank” in which a sound is held, unprocessed, until the next sound is heard; only then can it be made meaningful. This sensory store can hold a large amount of auditory information for a short period of time (about 3–4 seconds).

The sound effectively echoes in the mind, replaying for this brief period after it is heard. Echoic memory encodes only the most basic features of the input, such as pitch, which points to its localization in non-association brain regions.

Neuroscience of Echoic Memory

Auditory sensory memory has been found to be stored in the primary auditory cortex of the hemisphere contralateral to (opposite) the ear in which the sound is presented. Because echoic memory takes part in several different processes, its storage involves a number of brain regions.

Most of the implicated regions are concentrated in the prefrontal cortex, which supports executive function and attentional control. Increased activity in left-hemisphere areas suggests that the phonological store and the rehearsal system are left-lateralized memory systems.

The major regions involved are the left posterior ventrolateral prefrontal cortex, the left premotor cortex, and the left posterior parietal cortex.

Broca’s area is the main site within the ventrolateral prefrontal cortex responsible for verbal rehearsal and the articulatory process. The dorsal premotor cortex is involved in rhythmic organization and rehearsal, whereas the posterior parietal cortex is involved in localizing objects in space.

The precise location of the cortical regions thought to be involved in auditory sensory memory, as indexed by the mismatch negativity response, remains unknown. However, findings have indicated comparable levels of activation in the superior temporal gyrus and the inferior temporal gyrus.

Discovery Timeline

Shortly after George Sperling’s partial report studies of the visual sensory memory store, researchers began looking for an equivalent store in the auditory domain. In 1967, Ulric Neisser introduced the term “echoic memory” to denote this brief representation of acoustic information.

Echoic memory was initially investigated with partial report paradigms similar to those Sperling used. More recently, neuropsychological techniques have made it possible to estimate the capacity, duration, and location of the echoic memory store.

Using Sperling’s model as an analogue, researchers have adapted his partial and whole report tests to auditory sensory storage. They observed that echoic memory can retain information for up to 4 seconds.

However, estimates of how long echoic memory retains information after it is heard vary. Guttman and Julesz suggested that it may last approximately one second or less, whereas Eriksen and Johnson suggested that it can last up to 10 seconds.

Baddeley’s model of working memory includes a visuospatial sketchpad, which is related to iconic memory, and a phonological loop, which processes auditory information. The phonological loop has two components.

The first is a phonological store for the words we hear, which can retain information for 3–4 seconds before it decays. This is far longer than iconic memory, which lasts less than 1,000 ms.

The second is a subvocal rehearsal process that uses one’s “inner voice” to refresh the memory trace, so that the words repeat in a loop in our minds. The model does not, however, describe in detail how initial sensory input relates to subsequent memory processes.

Deficit Impacts

Auditory memory problems in children have been linked to developmental language disorders. These problems are difficult to detect, because poor performance may reflect difficulty understanding the task rather than a memory deficit.

Mismatch negativity tasks, which use electroencephalography to detect changes in brain activation, provide an independent way to test auditory sensory memory. They record auditory event-related potentials elicited 150–200 ms after a stimulus. The stimulus of interest is an unattended, infrequent “oddball” (deviant) stimulus presented within a sequence of standard stimuli, so that the deviant can be compared against the memory trace left by the standards.

The mismatch negativity task was used to assess people who had suffered unilateral damage to the dorsolateral prefrontal cortex or the temporal-parietal cortex following a stroke. In the control group, mismatch negativity amplitude was greatest over the right hemisphere, regardless of whether the tone was presented to the right or the left ear.

In patients with temporal-parietal damage, mismatch negativity was considerably reduced when the auditory stimulus was presented to the ear contralateral to the lesioned hemisphere. This is consistent with the hypothesis that auditory sensory memory is stored in the auditory cortex contralateral to the ear of presentation.
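The oddball paradigm used in mismatch negativity tasks can be sketched in a few lines of Python. This is a minimal illustration, not the protocol of the studies discussed here: the function names `oddball_sequence` and `difference_wave`, the default deviant probability, and the rule that a minimum number of standards separates deviants are all assumptions chosen for illustration. The second function computes the conventional mismatch negativity difference wave (the average response to deviants minus the average response to standards) from epochs represented as plain lists of voltage samples.

```python
import random


def oddball_sequence(n_trials, deviant_prob=0.1, min_gap=2, seed=None):
    """Return a list of 'standard'/'deviant' trial labels.

    Illustrative assumptions (not taken from the cited studies):
    - a deviant may occur with probability `deviant_prob` on any trial
      where it is allowed;
    - at least `min_gap` standards precede every deviant, including the
      first, so each deviant follows an established standard memory trace.
    """
    rng = random.Random(seed)
    labels = []
    standards_since_deviant = 0  # sequence opens with standards only
    for _ in range(n_trials):
        if standards_since_deviant >= min_gap and rng.random() < deviant_prob:
            labels.append("deviant")
            standards_since_deviant = 0
        else:
            labels.append("standard")
            standards_since_deviant += 1
    return labels


def difference_wave(epochs, labels):
    """Mean deviant epoch minus mean standard epoch, sample by sample.

    `epochs` is a list of equal-length lists of voltages, one per trial;
    `labels` gives the matching 'standard'/'deviant' label for each trial.
    """
    def mean_epoch(kind):
        chosen = [e for e, lab in zip(epochs, labels) if lab == kind]
        if not chosen:
            raise ValueError(f"no {kind} epochs to average")
        # zip(*chosen) groups the values at each time sample across trials
        return [sum(samples) / len(chosen) for samples in zip(*chosen)]

    deviant_avg = mean_epoch("deviant")
    standard_avg = mean_epoch("standard")
    return [d - s for d, s in zip(deviant_avg, standard_avg)]
```

Requiring a few standards before each deviant mirrors the idea in the text that the deviant is compared with a memory trace built up by the preceding standards. In real recordings, the negative deflection of the difference wave in roughly the latency window given above would be read as the mismatch negativity.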

Further research on stroke patients with impaired auditory sensory memory found that listening to music or audiobooks every day improved their echoic memory. This suggests that music has a positive effect on neural rehabilitation after brain injury.

Sensory Modality Comparison

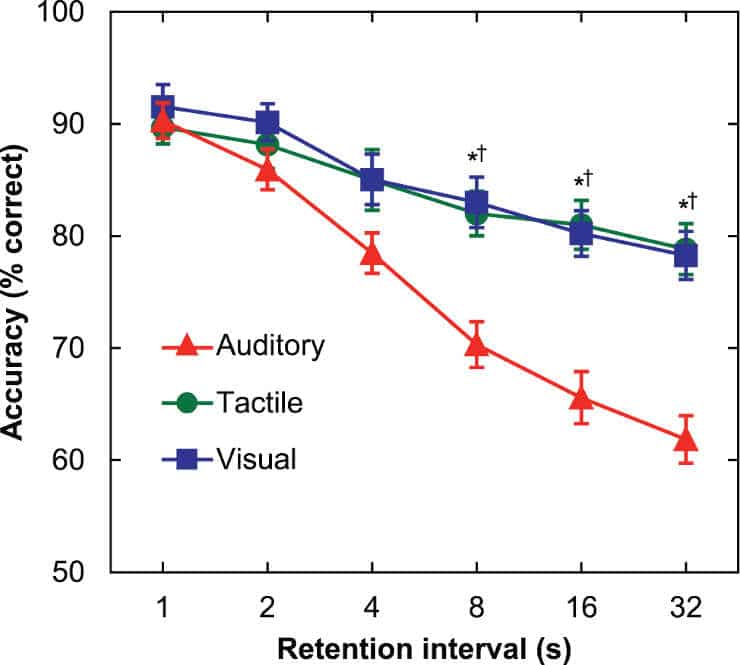

In a 2014 study, researchers at the University of Iowa found that we remember things we hear far less well than things we see or touch.

“We tend to think that the parts of our brain wired for memory are integrated. But our findings indicate our brain may use separate pathways to process information. Even more, our study suggests the brain may process auditory information differently than visual and tactile information, and alternative strategies — such as increased mental repetition — may be needed when trying to improve memory,”

said Amy Poremba, associate professor in the UI Department of Psychology and corresponding author of the paper.

Poremba and lead author James Bigelow found that when more than 100 UI undergraduates were exposed to a variety of sounds, visual images, and objects they could touch, the students were least likely to remember the sounds.

In one experiment, participants listened to pure tones through headphones, looked at different shades of red squares, and felt low-intensity vibrations by gripping an aluminum bar. Delays of one to 32 seconds separated each series of tones, squares, and vibrations.

Although students’ memory declined in all three modalities as the delays grew longer, the decline was much steeper for sounds and began as early as four to eight seconds after exposure. While this may seem a short interval, Poremba compares it to forgetting a phone number that was not written down.

“If someone gives you a number, and you dial it right away, you are usually fine. But do anything in between, and the odds are you will have forgotten it,”

she explained.

In a subsequent experiment, Bigelow and Poremba tested participants’ memory for things they might encounter in daily life. Students listened to audio recordings of dogs barking, watched silent footage of a basketball game, and touched and held everyday objects, such as a coffee mug, that were hidden from view.

The researchers found that students’ memory for sounds was worse between an hour and a week later, while their memory for visual images and tactile objects remained roughly the same.

Previous research has suggested that humans may have superior visual memory, and that hearing words associated with sounds, rather than the sounds alone, may aid memory. Bigelow and Poremba’s study builds on those findings by confirming that we do remember less of what we hear, regardless of whether the sounds are linked to words.

The study is also the first to demonstrate that our ability to recall what we touch is nearly equal to our ability to recall what we see. This finding is significant because studies of non-human primates such as monkeys and chimpanzees have shown that they excel at visual and tactile memory tests but struggle with auditory tasks.

Based on these observations, the authors believe humans’ weakness for remembering sounds likely has its roots in the evolution of the primate brain.

References:

- Alain, Claude; Woods, David L.; Knight, Robert T. (1998). A distributed cortical network for auditory sensory memory in humans. Brain Research. 812 (1–2): 23–37. doi:10.1016/S0006-8993(98)00851-8

- Baddeley, Alan D.; Eysenck, Michael W.; Anderson, Mike (2009). Memory. New York: Psychology Press. ISBN 978-1-84872-000-8

- Bigelow, James; Poremba, Amy (2014). Achilles’ Ear? Inferior Human Short-Term and Recognition Memory in the Auditory Modality. PLoS ONE. 9 (2): e89914. doi:10.1371/journal.pone.0089914

- Bjork, Elizabeth Ligon; Bjork, Robert A., eds. (1996). Memory. New York: Academic Press. ISBN 978-0-12-102571-7

- Darwin, Christopher J.; Turvey, Michael T.; Crowder, Robert G. (1972). An auditory analogue of the Sperling partial report procedure: Evidence for brief auditory storage. Cognitive Psychology. 3 (2): 255–67. doi:10.1016/0010-0285(72)90007-2

- Eriksen, Charles W.; Johnson, Harold J. (1964). Storage and decay characteristics of nonattended auditory stimuli. Journal of Experimental Psychology. 68 (1): 28–36. doi:10.1037/h0048460

- Radvansky, Gabriel (2005). Human Memory. Boston: Allyn and Bacon. ISBN 978-0-205-45760-1

- Sabri, Merav; Kareken, David A; Dzemidzic, Mario; Lowe, Mark J; Melara, Robert D (2004). Neural correlates of auditory sensory memory and automatic change detection. NeuroImage. 21 (1): 69–74. doi:10.1016/j.neuroimage.2003.08.033

- Särkämö, Teppo; Pihko, Elina; Laitinen, Sari; Forsblom, Anita; Soinila, Seppo; Mikkonen, Mikko; Autti, Taina; Silvennoinen, Heli M.; et al. (2010). Music and Speech Listening Enhance the Recovery of Early Sensory Processing after Stroke. Journal of Cognitive Neuroscience. 22 (12): 2716–27. doi:10.1162/jocn.2009.21376

- Schönwiesner, M.; Novitski, N.; Pakarinen, S.; Carlson, S.; Tervaniemi, M.; Näätänen, R. (2007). Heschl’s Gyrus, Posterior Superior Temporal Gyrus, and Mid-Ventrolateral Prefrontal Cortex Have Different Roles in the Detection of Acoustic Changes. Journal of Neurophysiology. 97 (3): 2075–82. doi:10.1152/jn.01083.2006