Two distinct brain regions are essential for integrating semantic information while reading, recent research from the University of Texas Health Science Center at Houston (UTHealth Houston) suggests. The finding may help explain why people with aphasia struggle with semantics.

Language relies heavily on integrating lexical information across multiple words to derive semantic concepts, such as statements of truth and references to objects and events. However, how the brain integrates this semantic information during reading has remained poorly understood.

“Typically, we take pieces from different words and derive a meaning that’s separate. For example, one of the definitions in our study was ‘a round red fruit.’ The word ‘apple’ doesn’t appear in that sentence, but we wanted to know how patients made that inference. We were able to expose the dynamics of how the human brain integrates semantic information, and which areas come online at different stages,” said Elliot Murphy, Ph.D.

Murphy, a postdoctoral research fellow in the Vivian L. Smith Department of Neurosurgery at McGovern Medical School at UTHealth Houston, and Nitin Tandon, M.D., a professor and chair ad interim of the department at the medical school, served as the study’s first and senior authors, respectively.

Referential vs Non-referential Descriptions

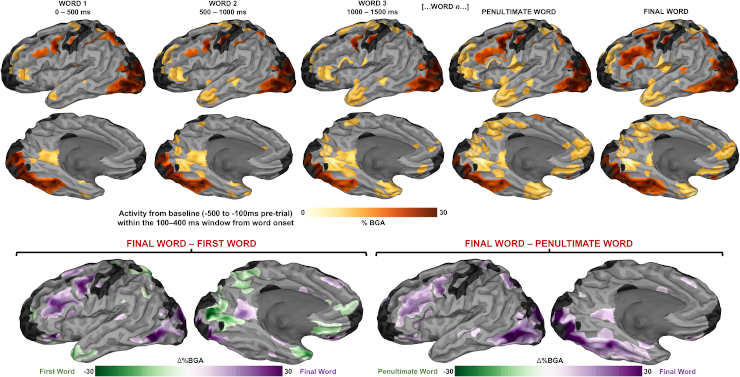

Responses to orthographic stimuli (averaged across the 100–400 ms window after the presentation of each word), relative to baseline (−500 ms to −100 ms before first word onset) represented using a surface-based mixed effects multi-level analysis (SB-MEMA) (thresholded at t > 1.96, patient coverage ≥3, p < 0.01).

Credit: Nat Commun 14, 6336 (2023). doi: 10.1038/s41467-023-42087-8

The researchers studied intracranial recordings in 58 epilepsy patients who read written word definitions, which were either referential or non-referential to a common object, as well as phrases that were either coherent (“a person at the circus who makes you laugh”) or incoherent (“a place where oceans shop”).

Sentences were displayed on the screen one word at a time, and researchers concentrated their analysis on the time window when the final word in the sentence was displayed.

Overall, they found that distinct regions of the language network became sensitive to meaning within a narrow window of rapidly cascading activity.

Specifically, they identified complementary cortical mosaics for semantic integration in two areas: the posterior temporal cortex and the inferior frontal cortex. The posterior temporal cortex is activated early in the semantic integration process, while the inferior frontal cortex is particularly sensitive to all aspects of meaning, especially in deep sulcal sites, the grooves in the folds of the brain.

Aphasia Insights

Murphy said the findings may shed light on the inner workings of aphasia, a disorder characterized by difficulty understanding and expressing spoken and written language. It can develop gradually as a result of a growing brain tumor or a disease, or it can appear abruptly after a stroke or head injury.

While they are able to understand individual words, people with aphasia frequently struggle with semantic integration, which prevents them from drawing further semantic conclusions.

“Both frontal and posterior temporal damage disrupt semantic integration, which we see happen in individuals with various aphasias. We speculate that this intricately designed mosaic structure makes some sense out of the varying semantic deficits people experience after frontal strokes,” Murphy said.

Abstract

Language depends critically on the integration of lexical information across multiple words to derive semantic concepts. Limitations of spatiotemporal resolution have previously rendered it difficult to isolate processes involved in semantic integration. We utilized intracranial recordings in epilepsy patients (n = 58) who read written word definitions. Descriptions were either referential or non-referential to a common object. Semantically referential sentences enabled high frequency broadband gamma activation (70–150 Hz) of the inferior frontal sulcus (IFS), medial parietal cortex, orbitofrontal cortex (OFC) and medial temporal lobe in the left, language-dominant hemisphere. IFS, OFC and posterior middle temporal gyrus activity was modulated by the semantic coherence of non-referential sentences, exposing semantic effects that were independent of task-based referential status. Components of this network, alongside posterior superior temporal sulcus, were engaged for referential sentences that did not clearly reduce the lexical search space by the final word. These results indicate the existence of complementary cortical mosaics for semantic integration in posterior temporal and inferior frontal cortex.

Reference:

- Murphy, E., Forseth, K.J., Donos, C. et al. The spatiotemporal dynamics of semantic integration in the human brain. Nat Commun 14, 6336 (2023). doi: 10.1038/s41467-023-42087-8